Setting up a Local Kubernetes Cluster

May 09, 2020 - 21 min read

![Everybody loves containers](/static/c3b5f853c2adfb550789711cc5dbe5d4/faddd/setting-up-local-kubernetes.jpg)

Everybody loves containers. Photo by ankitasiddiqui.

So, you want to play around with Kubernetes

Unless you've been living under a rock for the last few years, you know that terms like "DevOps" and "infrastructure as code" (IaC) have been thrown around a lot and, chances are, you've already tried to take a peek at the magical and mysterious world of Kubernetes. If so many people praise it, you might as well take a look to see what it's all about, right?

And this is where things start to go wrong. Suddenly, you have a whole universe of new terminology to understand, like "deployment", "pod", "container", "ClusterIP" and many others. On top of that, the extensive documentation on the subject is, for the most part, not exactly beginner-friendly, and many samples or tutorials refer to actual cloud environments, such as AWS or Azure.

If you found yourself in that position (or a similar one), fear not, because I'm here to help you set up a local Kubernetes cluster on your machine, using docker, for development or testing purposes. I won't show every kind of object available in Kubernetes since we won't use them all, and keep in mind that the explanations given here barely scratch the surface of what Kubernetes can do for you. I will also try to link to the official documentation as much as possible (because these things tend to change a lot over time), but remember that I'm helping you take the first steps, not reach your final destination.

A huge disclaimer: I'm doing all this on a Linux environment. I will reference Windows and Mac commands as best as I can, but if you're on one of those platforms, you might need some additional googling around. For the most part though, Windows and Mac users have an easier time during setup, thanks to Docker Desktop.

Our application

Before we start messing around with Kubernetes, we need an application to be deployed to check if our infrastructure works. Let us quickly create a dead-simple NodeJS project and install express:

```shell
# create a new directory for our demo
# and cd to it
$ mkdir -p k8s-demo
$ cd k8s-demo

# initialize a nodejs project
$ npm init -y
Wrote to /home/user/k8s-demo/package.json:

{
  "name": "k8s-demo",
  "version": "1.0.0",
  "description": "",
  "main": "index.js",
  "scripts": {
    "test": "echo \"Error: no test specified\" && exit 1"
  },
  "keywords": [],
  "author": "",
  "license": "ISC"
}

# install extra packages (-S is short for --save)
$ npm i -S express
+ express@4.17.1
added 50 packages from 37 contributors in 7.692s
```

Now, replace the scripts section of our package.json (only this section, not the entire file!) with the following snippet:

```json
"scripts": {
  "start": "node index.js"
},
```

For those who don't know, this creates a start script in our project, with the command needed to run the application (node index.js). The last missing piece is the index.js file, with the following contents:

```javascript
const express = require("express");

const app = express();

app.get("/", (req, res) => {
  res.send("<h1>It works!</h1>");
});

app.get("/time", (req, res) => {
  res.send(new Date().toISOString());
});

app.listen(8080, () => {
  console.log("Server listening at port 8080");
});
```

Even if you've never used express, you can probably guess what this application does. We have two endpoints, / and /time, that show, respectively, a static "It works!" message and the current timestamp. You can run this app with the npm start command inside the application folder and check that everything works as expected by pointing your browser to http://localhost:8080.
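If it helps to see what express is doing for us, here is a minimal sketch of the same two routes using only Node's built-in http module. It is an illustration, not a replacement for the express app used in the rest of this post, and the names route and createServer are hypothetical. Keeping the routing in a pure function makes the behavior easy to check without opening a socket.

```javascript
// Dependency-free sketch of the same two routes as index.js, using Node's
// built-in http module instead of express. Names are illustrative only.
const http = require("http");

// Pure routing logic: maps a method and path to a status code and body.
function route(method, path, now = new Date()) {
  if (method === "GET" && path === "/") {
    return { status: 200, body: "<h1>It works!</h1>" };
  }
  if (method === "GET" && path === "/time") {
    return { status: 200, body: now.toISOString() };
  }
  // Anything else gets a 404, roughly like express does for unmatched routes.
  return { status: 404, body: "Not Found" };
}

// Wraps the routing function in an HTTP server; nothing listens until
// .listen() is called on the returned server.
function createServer() {
  return http.createServer((req, res) => {
    const { pathname } = new URL(req.url, "http://localhost");
    const { status, body } = route(req.method, pathname);
    res.writeHead(status, { "Content-Type": "text/html; charset=utf-8" });
    res.end(body);
  });
}
```

To actually serve requests, you would call createServer().listen(8080, () => console.log("Server listening at port 8080")), which mirrors what app.listen does in index.js.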

Creating a Docker image

With our application done, the next step is to create a docker image with it. First, make sure you have docker installed, by using the appropriate installer for your platform. Windows, Mac, and the supported flavors of Linux have an easy installer that 'just works', but if that is not your case, check to see if your distro provides a docker package or use the Linux installation script. Depending on your distro and the method used to install docker, you might need to manually enable the docker service and/or add your current user to the docker group, for example:

```shell
# enable the service, on systemd systems
$ sudo systemctl start docker.service
$ sudo systemctl enable docker.service

# enable the service, on runit systems
$ sudo ln -s /etc/sv/docker /var/service/

# add the current user to the docker group
$ sudo usermod -aG docker $USER
```

After the install, you will likely need to log out of your session or reboot (yes, even on Windows and Mac) to apply all the changes and start the needed services. To check that everything is working as intended, try:

```shell
# check the version
$ docker version
Client:
 Version:           19.03.8
 API version:       1.40
 Go version:        go1.14
 Git commit:
 Built:             Fri Mar 20 23:10:55 2020
 OS/Arch:           linux/amd64
 Experimental:      false

Server:
 Engine:
  Version:          19.03.8
  API version:      1.40 (minimum version 1.12)
  Go version:       go1.14
  Git commit:       v19.03.8
  Built:            Fri Mar 20 23:10:55 2020
  OS/Arch:          linux/amd64
  Experimental:     false
 containerd:
  Version:          1.3.2
  GitCommit:        UNSET
 runc:
  Version:          spec: 1.0.1-dev
  GitCommit:
 docker-init:
  Version:          0.18.0
  GitCommit:        fec3683

# run the 'hello world' sample image
$ docker run hello-world
Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
0e03bdcc26d7: Pull complete
Digest: sha256:8e3114318a995a1ee497790535e7b88365222a21771ae7e53687ad76563e8e76
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/
```

Of course, you will probably see slightly different information on your machine, but that is ok. The point here is to check that docker is correctly installed and is working for the current user (you shouldn't need sudo to run these commands). The next step now is to dockerize our application by creating a file with the name Dockerfile on our project root, with the following contents:

```dockerfile
FROM node:alpine
WORKDIR /app
COPY package.json .
RUN npm install
COPY index.js .
CMD [ "npm", "start" ]
```

This Dockerfile defines that our image starts from the node:alpine base image. Then, we define /app as our WORKDIR (meaning that everything we do from now on will happen inside the /app directory of the container) and copy the package.json file into the container, running npm install to download and install the project dependencies. In the last steps, we copy our source file, index.js, and declare that the default "run" command of the image will be npm start.
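The order of the COPY instructions is not accidental. Docker caches the result of each build step and, roughly speaking, reuses cached layers until it reaches the first step whose inputs changed; from that step on, everything is rebuilt. The sketch below is a deliberately simplified model of that rule (it only tracks which files each COPY reads and ignores everything else Docker takes into account, such as base image updates), and the names steps and stepsToRebuild are hypothetical. It shows why copying package.json before index.js means an edit to index.js does not rerun the slow npm install.

```javascript
// Simplified model of Docker's build cache (a sketch, not Docker's real
// algorithm): the first step whose copied files changed is a cache miss,
// and every step after it is rebuilt as well.
const steps = [
  { instruction: "FROM node:alpine" },
  { instruction: "WORKDIR /app" },
  { instruction: "COPY package.json .", files: ["package.json"] },
  { instruction: "RUN npm install" },
  { instruction: "COPY index.js .", files: ["index.js"] },
  { instruction: 'CMD [ "npm", "start" ]' },
];

// Returns the instructions that would run again after the given files changed.
function stepsToRebuild(buildSteps, changedFiles) {
  const changed = new Set(changedFiles);
  const firstMiss = buildSteps.findIndex((step) =>
    (step.files || []).some((file) => changed.has(file))
  );
  return firstMiss === -1
    ? []
    : buildSteps.slice(firstMiss).map((step) => step.instruction);
}
```

With this ordering, stepsToRebuild(steps, ["index.js"]) only lists the last two instructions, while a change to package.json also brings back RUN npm install, which is what you want, since the dependencies may have changed.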

If you've never worked with docker before, you might have some doubts about the exact meaning of the commands used here. If that is the case, please check the official Dockerfile reference. Lastly, to build an image, run the docker build command inside the application root directory, as shown below:

```shell
# create an image, tagging it as `user/demo`. You could use any name you want locally, but
# it is considered a good practice to use the <user>/<name> format when creating
# your custom images
$ docker build -t user/demo .
Sending build context to Docker daemon 2.01MB
Step 1/6 : FROM node:alpine
alpine: Pulling from library/node
cbdbe7a5bc2a: Pull complete
12f343f6cb98: Pull complete
916b594bfc18: Pull complete
e52b3a5de1cd: Pull complete
Digest: sha256:dd23ffe50ad5b5fc6efae6cd71bb42a61e8c11e881b05434afed90734fc33fc9
Status: Downloaded newer image for node:alpine
 ---> 87c43f8d8077
Step 2/6 : WORKDIR /app
 ---> Running in de803753fd27
Removing intermediate container de803753fd27
 ---> 0b00945e04d2
Step 3/6 : COPY package.json .
 ---> 95e719ab0bb0
Step 4/6 : RUN npm install
 ---> Running in 0c8e4f558fbc
npm notice created a lockfile as package-lock.json. You should commit this file.
npm WARN k8s-demo@1.0.0 No description
npm WARN k8s-demo@1.0.0 No repository field.
added 50 packages from 37 contributors in 14.268s
Removing intermediate container 0c8e4f558fbc
 ---> 9fd2e1ce4c9b
Step 5/6 : COPY index.js .
 ---> 23f9ca1254bf
Step 6/6 : CMD [ "npm", "start" ]
 ---> Running in 90198ce43550
Removing intermediate container 90198ce43550
 ---> d010af435b1f
Successfully built d010af435b1f
Successfully tagged user/demo:latest

# check to see if the image is really available
$ docker image ls
REPOSITORY     TAG       IMAGE ID       CREATED              SIZE
user/demo      latest    d010af435b1f   About a minute ago   120MB
node           alpine    87c43f8d8077   23 hours ago         117MB
hello-world    latest    bf756fb1ae65   4 months ago         13.3kB

# run the container, exposing the port 8080 (format: <host_port>:<container_port>)
# if you already have the application running locally, stop it first!
$ docker run --rm -p 8080:8080 user/demo

> k8s-demo@1.0.0 start /app
> node index.js

Server listening at port 8080
```

If you followed these steps, you should have an image called user/demo with the latest tag (applied automatically when we don't specify a tag name), and a container running this image, exposing the results on port 8080. This means that if we try to access http://localhost:8080 again, we should see the same results as before. You can stop the process with Ctrl+C.
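The -p flag always reads as <host_port>:<container_port>, and mixing up the two sides is a common mistake. To make the direction explicit, here is a tiny parser for just that two-number form. Docker also accepts other forms (an IP prefix, port ranges, a /udp suffix) that this sketch deliberately rejects, and the function name parsePortMapping is hypothetical.

```javascript
// Parses the simple "<host_port>:<container_port>" form used with docker run -p.
// Other forms Docker accepts (IP prefixes, ranges, /udp) are out of scope here.
function parsePortMapping(spec) {
  const match = /^(\d+):(\d+)$/.exec(spec);
  if (!match) {
    throw new Error(`unsupported port mapping: ${spec}`);
  }
  const hostPort = Number(match[1]);
  const containerPort = Number(match[2]);
  for (const port of [hostPort, containerPort]) {
    if (port < 1 || port > 65535) {
      throw new RangeError(`port out of range: ${port}`);
    }
  }
  return { hostPort, containerPort };
}
```

For example, parsePortMapping("3000:8080") gives { hostPort: 3000, containerPort: 8080 }: the browser would then use http://localhost:3000, while the app inside the container keeps listening on 8080.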

So... why did we do all this? Since Kubernetes is "only" a container orchestration platform, we need at least a minimal understanding of what a container is in practice and how to make a simple container for our applications. Now that we have a working application and an image, and we know that the image works as intended, it is finally time to talk about Kubernetes!

Kubernetes installation

First of all, a small clarification is needed: Kubernetes does not depend on docker (and vice versa). Docker is a container platform, i.e., a platform to create and run "containerized" (dockerized) applications, so it focuses on packaging and distributing an application. Kubernetes, on the other hand, is an orchestration platform that helps you run, monitor, and scale your containerized applications. Comparing Docker with Kubernetes is like comparing apples to oranges: they're different tools, for different purposes, that happen to complement each other (for those interested, a better comparison would be Kubernetes versus Docker Swarm).

With that out of the way, I have good news for Windows and Mac users: in both cases, all you need to do is right-click the docker icon on your taskbar, click on Settings > Kubernetes and check the Enable Kubernetes checkbox. Apply your changes (it might take a while) and voilà, Kubernetes is ready to use. If you have any problems, the links below also contain extra information for Windows and Mac users.

Linux users, on the other hand, got the short end of the stick on this one. On Linux, we need to manually install kubectl (the tool to interact with the cluster) and minikube (the tool to create a local development cluster). Supported Linux flavors might already have a kubectl and/or minikube package available, but in any case, you should check the kubectl install docs and the minikube install docs to decide which path is best for your distro.

In my case, my distro does not have packages for these binaries and I opted to download the binaries directly. At the time of writing, these were the commands used:

```shell
# create a separate directory to hold the downloaded binaries.
# it could be anywhere, e.g. ~/.bin (writing to /opt usually
# requires root permissions)
$ mkdir -p /opt/kubernetes
$ cd /opt/kubernetes

# download kubectl and apply executable permissions
$ curl -LO https://storage.googleapis.com/kubernetes-release/release/`curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt`/bin/linux/amd64/kubectl
$ chmod +x ./kubectl

# download minikube and apply executable permissions
$ curl -Lo minikube https://storage.googleapis.com/minikube/releases/latest/minikube-linux-amd64
$ chmod +x minikube

# add the binary location to PATH.
# remember to also do this in your .profile, .bashrc, .zshrc or similar file
$ export PATH=$PATH:/opt/kubernetes
```

After doing that, we should be able to check our Kubernetes cluster with kubectl version:

```shell
$ kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.2", GitCommit:"52c56ce7a8272c798dbc29846288d7cd9fbae032", GitTreeState:"clean", BuildDate:"2020-04-16T11:56:40Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Unable to connect to the server: dial tcp 172.17.0.2:8443: connect: no route to host
```

Wait a minute... What the heck is that "Unable to connect to the server" error? It means that we don't have a Kubernetes cluster running. It happens on Linux because our cluster is not automatically managed by docker (Windows/Mac users should not get this error if docker is started and Kubernetes is enabled), but by minikube. This means that we need to manually start our cluster before using it:

```shell
# run this command and go make a coffee, because this
# one will take a while...
$ minikube start
minikube v1.9.2
Using the docker driver based on existing profile
Starting control plane node m01 in cluster minikube
Pulling base image ...
docker "minikube" container is missing, will recreate.
Creating Kubernetes in docker container with (CPUs=2) (4 available), Memory=3900MB (15900MB available) ...
Preparing Kubernetes v1.18.0 on Docker 19.03.2 ...
    ▪ kubeadm.pod-network-cidr=10.244.0.0/16
Enabling addons: default-storageclass, ingress, storage-provisioner
Done! kubectl is now configured to use "minikube"

# time to check the version again
$ kubectl version
Client Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.2", GitCommit:"52c56ce7a8272c798dbc29846288d7cd9fbae032", GitTreeState:"clean", BuildDate:"2020-04-16T11:56:40Z", GoVersion:"go1.13.9", Compiler:"gc", Platform:"linux/amd64"}
Server Version: version.Info{Major:"1", Minor:"18", GitVersion:"v1.18.0", GitCommit:"9e991415386e4cf155a24b1da15becaa390438d8", GitTreeState:"clean", BuildDate:"2020-03-25T14:50:46Z", GoVersion:"go1.13.8", Compiler:"gc", Platform:"linux/amd64"}
```

That's better. We now have a local Kubernetes cluster up and running, and we've confirmed that we can connect to it and issue commands. To see our installation in action, we need to create some Kubernetes objects in our cluster to deploy our application.

Deploying an application

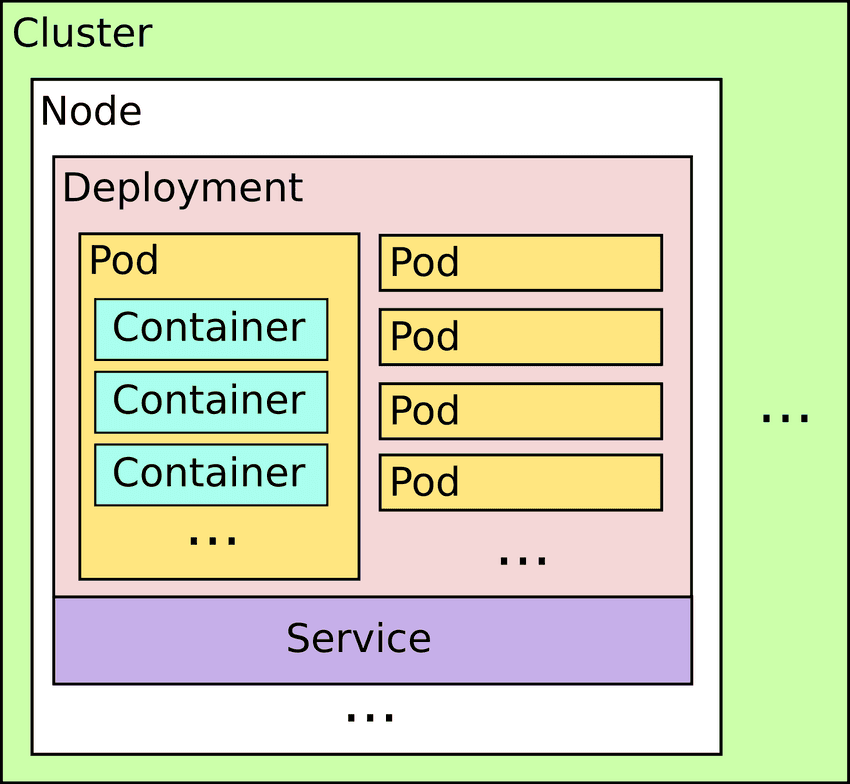

Before we start messing with configuration files, let's pause for a moment to take a look at the image below:

A simplification of Kubernetes infrastructure

Ok... this looks a bit messy, but what we need to know for now are the basic infrastructure objects used by Kubernetes:

- A cluster is a collection of one or more nodes.

- A node is a machine (physical or virtual) in the cluster that runs pods. Deployments and services are cluster-wide objects, so the pods they manage or expose can be spread over several nodes.

- Each deployment controls a set of one or more identical (usually stateless) pods, which may run on different nodes.

- A pod can contain one or more containers, depending on the needs of the application. Most of the time, however, pods contain a single container, since scaling is handled by the deployment (by running more copies of the pod), not by the pod itself.

- A service is a sort of "gateway" that allows access to the pods. Think of the service as the piece that makes the ports and endpoints of our application accessible, e.g. to other pods.
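If it helps to picture this hierarchy as data, here is a toy model in plain JavaScript objects. It only mirrors the nesting from the list above (cluster, nodes, pods, containers); it is not how Kubernetes represents anything internally, and every name in it is made up.

```javascript
// Toy model of the hierarchy described above; every name is hypothetical.
// The cluster holds nodes, nodes run pods, and each pod wraps one or more containers.
const cluster = {
  nodes: [
    {
      name: "node-1",
      pods: [
        { name: "demo-pod-1", labels: { app: "demo" }, containers: ["demo"] },
        { name: "other-pod-1", labels: { app: "other" }, containers: ["web", "log-shipper"] },
      ],
    },
    {
      name: "node-2",
      pods: [{ name: "demo-pod-2", labels: { app: "demo" }, containers: ["demo"] }],
    },
  ],
};

// Counts the objects at each level of the hierarchy.
function summarize(c) {
  const pods = c.nodes.flatMap((node) => node.pods);
  return {
    nodes: c.nodes.length,
    pods: pods.length,
    containers: pods.reduce((total, pod) => total + pod.containers.length, 0),
  };
}
```

Here, demo-pod-1 and demo-pod-2 stand for two replicas of the same (hypothetical) deployment scheduled on different nodes, while other-pod-1 shows the less common case of a pod with two containers; summarize(cluster) reports 2 nodes, 3 pods and 4 containers.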

Don't worry if you feel overwhelmed by all this terminology; that's natural. Luckily, our demo application doesn't need to worry about multiple nodes (we're running a local Kubernetes cluster for testing, remember?). We will need a single deployment, with a single pod, which will have only one container inside. In the last step, we will create a single service to make our application accessible.

To start, let's create a deployment with a single pod pointing to our image, by creating the file demo-depl.yaml with the following content:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
        - name: demo
          image: user/demo
```

We start this file by specifying apiVersion and kind, so Kubernetes can identify what kind of object we're creating (in this case, a deployment) and which version of the object API is being used (apps/v1, in this example). This is needed to maintain compatibility with older manifests on newer Kubernetes versions. We also give this deployment some metadata, particularly a name, so we can locate it more easily later.
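YAML is just a convenient notation for structured data, so it may help to see the same deployment as a plain object. kubectl apply also accepts JSON manifests, which means the object below could be serialized and applied directly. This is a sketch: the helper deploymentManifest and its parameters are hypothetical, and building the selector and the template labels from the same input is simply one way to keep them from drifting apart.

```javascript
// Builds the Deployment from demo-depl.yaml as a plain object.
// The helper name and its parameters are hypothetical.
function deploymentManifest({ name, containerName, image, labels, replicas = 1 }) {
  return {
    apiVersion: "apps/v1", // which version of the object API we are using
    kind: "Deployment", // which kind of object we are creating
    metadata: { name }, // lets us find the object later
    spec: {
      replicas,
      // Copies of the same labels, so the selector and the template always agree.
      selector: { matchLabels: { ...labels } },
      template: {
        metadata: { labels: { ...labels } },
        spec: {
          containers: [{ name: containerName, image }],
        },
      },
    },
  };
}

const manifest = deploymentManifest({
  name: "demo-depl",
  containerName: "demo",
  image: "user/demo",
  labels: { app: "demo" },
});
```

Printing it with console.log(JSON.stringify(manifest, null, 2)) and saving the output to a file (say, a hypothetical demo-depl.json) would give you something you could pass to kubectl apply -f just like the YAML version.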

The spec section is where the "meat" of the configuration is, and right away we tell Kubernetes that we want only one replica of the pods in this deployment. In a real-world scenario, we could later scale our application by increasing the number of replicas. The next property is the all-important selector, where we tell Kubernetes that this deployment manages pods that have a label with app as key and demo as value. These values could be anything we want (e.g. foo: bar), as long as they match the labels defined on the pods we're using. This means that, when we change this deployment, the changes will be applied to all pods with the app: demo label. Think of it as the way we tell Kubernetes which pods belong to which deployments.
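The matchLabels rule can be stated precisely: an object is selected when every key/value pair listed in matchLabels is also present in its labels, and any extra labels it carries don't matter. Here is a small sketch of that check; the function name matchesLabels and the sample pods are hypothetical, and it covers only matchLabels, not the set-based matchExpressions form that selectors also support.

```javascript
// Equality-based label matching, as used by matchLabels: every key/value pair
// in the selector must appear on the object; extra labels are ignored.
function matchesLabels(labels, matchLabels) {
  return Object.entries(matchLabels).every(([key, value]) => labels[key] === value);
}

// Hypothetical pods, used to show which ones the demo deployment would manage.
const pods = [
  { name: "demo-a", labels: { app: "demo" } },
  { name: "demo-b", labels: { app: "demo", tier: "web" } },
  { name: "other", labels: { app: "other" } },
];

const selected = pods
  .filter((pod) => matchesLabels(pod.labels, { app: "demo" }))
  .map((pod) => pod.name);
```

Here selected ends up as demo-a and demo-b: the extra tier label on demo-b doesn't stop it from matching, while other is left alone.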

After all that, template defines the "skeleton" of the pods to be created for this deployment. We start by specifying the labels metadata (again, make sure it matches the value in the selector), and then define only one container in its spec, with the name demo and using the previously created docker image user/demo. Since no tag is specified, the latest tag will be assumed.
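The tag defaulting works on the image reference string itself: if there is no :tag part, latest is implied. One subtlety is that a colon can also belong to a registry host with a port, so only a colon after the last slash marks a tag. The sketch below follows that rule; image digests (@sha256:...) are deliberately out of scope, and the function name withDefaultTag is hypothetical.

```javascript
// Splits an image reference into repository and tag, defaulting the tag to
// "latest". Only a ":" after the last "/" is a tag separator, so registry
// ports like localhost:5000 are not mistaken for tags. Digests are not handled.
function withDefaultTag(image) {
  const lastSlash = image.lastIndexOf("/");
  const lastColon = image.lastIndexOf(":");
  if (lastColon > lastSlash) {
    return { repository: image.slice(0, lastColon), tag: image.slice(lastColon + 1) };
  }
  return { repository: image, tag: "latest" };
}
```

So withDefaultTag("user/demo") yields tag latest, withDefaultTag("node:alpine") yields tag alpine, and withDefaultTag("localhost:5000/demo") still yields latest.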

For minikube users, there is an extra step before applying this configuration. Since minikube runs its own "separate" docker environment, we must first set the environment variables that point our docker client to minikube's docker daemon. To do that, run minikube docker-env and follow the steps. On my machine, this is the output:

```shell
# check what we need to do
$ minikube docker-env
export DOCKER_TLS_VERIFY="1"
export DOCKER_HOST="tcp://172.17.0.2:2376"
export DOCKER_CERT_PATH="/home/user/.minikube/certs"
export MINIKUBE_ACTIVE_DOCKERD="minikube"

# To point your shell to minikube's docker-daemon, run:
# eval $(minikube -p minikube docker-env)

# right, let's do it
$ eval $(minikube -p minikube docker-env)
```

That should do it for the current terminal. Remember to repeat this for every new terminal session; otherwise, the commands below will likely fail in very cryptic ways. With that out of the way, let's apply the configuration:

```shell
# apply the configuration.
# you can also use '.' instead of a file name
# to apply all the yaml files in the current directory
$ kubectl apply -f demo-depl.yaml
deployment.apps/demo-depl created

# check the deployments
# did it really work? why is 'available' zero?
$ kubectl get deployments
NAME        READY   UP-TO-DATE   AVAILABLE   AGE
demo-depl   0/1     1            0           13s

# check the pod
# what? why 'ImagePullBackOff'?
$ kubectl get pods
NAME                         READY   STATUS             RESTARTS   AGE
demo-depl-58db85cd8d-bdwt9   0/1     ImagePullBackOff   0          20s
```

Again, for Windows or Mac users, this configuration should've worked on the first try, but minikube users still have one more thing to do. Remember when I said that minikube runs in a separate docker environment? Guess what: that environment does not have the image we created previously. So we need to build the image again, this time in the correct environment, remove the broken deployment, and apply it again:

```shell
# notice that our image does not exist in the Kubernetes environment
# if you don't believe me, open a new terminal, outside the Kubernetes env,
# and compare the output of 'docker image ls'
$ docker image ls
REPOSITORY                                TAG          IMAGE ID       CREATED        SIZE
k8s.gcr.io/kube-proxy                     v1.18.0      43940c34f24f   6 weeks ago    117MB
k8s.gcr.io/kube-scheduler                 v1.18.0      a31f78c7c8ce   6 weeks ago    95.3MB
k8s.gcr.io/kube-apiserver                 v1.18.0      74060cea7f70   6 weeks ago    173MB
k8s.gcr.io/kube-controller-manager        v1.18.0      d3e55153f52f   6 weeks ago    162MB
kubernetesui/dashboard                    v2.0.0-rc6   cdc71b5a8a0e   7 weeks ago    221MB
k8s.gcr.io/pause                          3.2          80d28bedfe5d   2 months ago   683kB
k8s.gcr.io/coredns                        1.6.7        67da37a9a360   3 months ago   43.8MB
kindest/kindnetd                          0.5.3        aa67fec7d7ef   6 months ago   78.5MB
k8s.gcr.io/etcd                           3.4.3-0      303ce5db0e90   6 months ago   288MB
kubernetesui/metrics-scraper              v1.0.2       3b08661dc379   6 months ago   40.1MB
gcr.io/k8s-minikube/storage-provisioner   v1.8.1       4689081edb10   2 years ago    80.8MB

# build the image, again (output omitted)
$ docker build -t user/demo .

# now it should be listed as an available image
$ docker image ls
REPOSITORY                                TAG          IMAGE ID       CREATED         SIZE
user/demo                                 latest       2074e10a74bf   5 seconds ago   120MB
node                                      alpine       87c43f8d8077   27 hours ago    117MB
k8s.gcr.io/kube-proxy                     v1.18.0      43940c34f24f   6 weeks ago     117MB
k8s.gcr.io/kube-scheduler                 v1.18.0      a31f78c7c8ce   6 weeks ago     95.3MB
k8s.gcr.io/kube-apiserver                 v1.18.0      74060cea7f70   6 weeks ago     173MB
k8s.gcr.io/kube-controller-manager        v1.18.0      d3e55153f52f   6 weeks ago     162MB
kubernetesui/dashboard                    v2.0.0-rc6   cdc71b5a8a0e   7 weeks ago     221MB
k8s.gcr.io/pause                          3.2          80d28bedfe5d   2 months ago    683kB
k8s.gcr.io/coredns                        1.6.7        67da37a9a360   3 months ago    43.8MB
kindest/kindnetd                          0.5.3        aa67fec7d7ef   6 months ago    78.5MB
k8s.gcr.io/etcd                           3.4.3-0      303ce5db0e90   6 months ago    288MB
kubernetesui/metrics-scraper              v1.0.2       3b08661dc379   6 months ago    40.1MB
quay.io/kubernetes-ingress-controller/nginx-ingress-controller   0.26.1   29024c9c6e70   7 months ago   483MB
gcr.io/k8s-minikube/storage-provisioner   v1.8.1       4689081edb10   2 years ago     80.8MB

# remove the deployment
$ kubectl delete -f demo-depl.yaml
deployment.apps "demo-depl" deleted

# let's try again
$ kubectl apply -f demo-depl.yaml
deployment.apps/demo-depl created

# what? again?
$ kubectl get pods
NAME                         READY   STATUS             RESTARTS   AGE
demo-depl-58db85cd8d-md4br   0/1     ImagePullBackOff   0          16s
```

Well, there is one tiny little problem remaining. Since we (implicitly) used the latest tag of the image, Kubernetes tries to download the image from Docker Hub and fails (because it doesn't exist there, obviously). The solution is to add an imagePullPolicy to our pod template, specifying that the image should only be pulled when it doesn't exist locally (IfNotPresent) or never pulled at all (Never). This goes in the containers array of demo-depl.yaml, which should end up looking like this:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-depl
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo
  template:
    metadata:
      labels:
        app: demo
    spec:
      containers:
        - name: demo
          image: user/demo
          imagePullPolicy: IfNotPresent # <<--- add this line
```

Try again and now you should have a running pod:

```shell
$ kubectl delete -f demo-depl.yaml
deployment.apps "demo-depl" deleted

$ kubectl apply -f demo-depl.yaml
deployment.apps/demo-depl created

$ kubectl get pods
NAME                         READY   STATUS    RESTARTS   AGE
demo-depl-5674bb5794-6ls8w   1/1     Running   0          5s
```

Finally! But now the question is: how can we "see" our running application? If you point your browser to http://localhost:8080, you will notice that it does not answer our requests. This happens because we're missing one last object in our configuration...
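Before we add that last object, it's worth summarizing the pull-policy behavior behind both ImagePullBackOff errors. According to the Kubernetes documentation, when imagePullPolicy is omitted it defaults to Always if the tag is latest or missing, and to IfNotPresent otherwise. Our untagged user/demo therefore got Always, so Kubernetes kept trying to pull it from Docker Hub even after we built it inside minikube. The sketch below encodes those documented rules; it is not kubelet code, the function names are hypothetical, and image digests are ignored.

```javascript
// Sketch of the documented imagePullPolicy rules (not the kubelet's code).
// `tag` is undefined when the image reference has no ":tag" part.
function defaultPullPolicy(tag) {
  return tag === undefined || tag === "latest" ? "Always" : "IfNotPresent";
}

// Whether the node tries to pull the image before starting the container.
function shouldPull(policy, presentLocally) {
  switch (policy) {
    case "Always":
      return true;
    case "IfNotPresent":
      return !presentLocally;
    case "Never":
      // Never pull; if the image is missing locally, the pod reports an error.
      return false;
    default:
      throw new Error(`unknown imagePullPolicy: ${policy}`);
  }
}
```

With the explicit IfNotPresent we added and the image already built inside minikube, shouldPull("IfNotPresent", true) is false, which is why the pod finally started. Tagging the image with something other than latest (for example, a hypothetical user/demo:v1) would also have changed the default to IfNotPresent.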

Creating a service

The service, as I said earlier, is the "thing" that makes our application accessible. There are various types of services in Kubernetes, but for this demo, we will use a NodePort service, which exposes the application on a port of every node and is handy for quick testing in local environments (in production, you should use a more robust solution, like an NGINX Ingress Controller). To do that, we need to create a new file, demo-svc.yaml, with the following contents:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: demo-svc
spec:
  type: NodePort
  selector:
    app: demo
  ports:
    - name: demo
      protocol: TCP
      port: 8080
      targetPort: 8080
```

In this file, targetPort is the port that our container listens on (remember, in our application we defined it as 8080) and port is the port the service itself exposes inside the cluster. They don't need to have the same value, but we will keep them equal to make them easier to remember. The rest of the configuration (namely, name and selector) has the same meaning as explained earlier.
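For a NodePort service there are actually three ports in play: nodePort, opened on every node (and, when omitted, picked automatically from the cluster's node port range, 30000-32767 by default); port, the service's own port on its cluster IP; and targetPort, the container port. The sketch below just spells out that chain for our demo-svc. The helper names are hypothetical, and the IPs and the 32246 node port are the values that show up in the minikube and kubectl output later in this post.

```javascript
// Default range Kubernetes picks NodePort values from when nodePort is omitted
// (configurable with the API server's --service-node-port-range flag).
const NODE_PORT_RANGE = { min: 30000, max: 32767 };

function inDefaultNodePortRange(port) {
  return Number.isInteger(port) && port >= NODE_PORT_RANGE.min && port <= NODE_PORT_RANGE.max;
}

// Lists the hops a request takes through a NodePort service, outermost first.
function describePortChain({ nodeIp, nodePort, clusterIp, port, targetPort }) {
  if (!inDefaultNodePortRange(nodePort)) {
    throw new RangeError(`nodePort ${nodePort} is outside ${NODE_PORT_RANGE.min}-${NODE_PORT_RANGE.max}`);
  }
  return [
    `outside the cluster: ${nodeIp}:${nodePort} (nodePort)`,
    `inside the cluster: ${clusterIp}:${port} (port)`,
    `inside the pod: container port ${targetPort} (targetPort)`,
  ];
}

const chain = describePortChain({
  nodeIp: "172.17.0.2",
  nodePort: 32246,
  clusterIp: "10.104.57.27",
  port: 8080,
  targetPort: 8080,
});
```

Reading the chain from top to bottom is a handy way to debug a service that doesn't answer: check each hop in turn, starting from the outside.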

Let's apply that file and see what happens:

```shell
# apply the configuration
$ kubectl apply -f demo-svc.yaml
service/demo-svc created

# take a look at our little service
$ kubectl get services
NAME         TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)          AGE
demo-svc     NodePort    10.104.57.27   <none>        8080:32246/TCP   17s
kubernetes   ClusterIP   10.96.0.1      <none>        443/TCP          2d23h
```

Notice the "crazy" port number after 8080 (the number on your machine will most likely be different). This is the port where we can access our application "from outside" the cluster. Kubernetes picked it automatically from its node port range (30000-32767 by default), but we could have chosen it ourselves with the nodePort property in the service file. Try to reach http://localhost:32246 (or whatever your port number is) and you will see our application running!

That is, unless you're using minikube. In that case, you need to use the IP of the minikube node instead of localhost, which you can get with the minikube ip command:

```shell
# discover your node IP
$ minikube ip
172.17.0.2

# now, send a request to the correct address
$ curl 172.17.0.2:32246
<h1>It works!</h1>
```

And that's it! We created an application, "dockerized" it, and created the minimum set of infrastructure objects needed to run it on a local Kubernetes cluster. Now you have a working environment that you can use to dig deeper and play around with kubectl and friends. If you wish, the source code can be downloaded here.
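If you'd rather script that final check than run curl by hand, here is a small smoke-test sketch. It assumes Node 18 or newer (for the built-in fetch) and takes the base URL as an argument, for example http://172.17.0.2:32246 from the minikube ip output above; the function names are hypothetical. The validation part is kept separate so it can be exercised without a running cluster.

```javascript
// Pure validation of the two responses our demo app produces.
function validateResponses(rootBody, timeBody) {
  const problems = [];
  if (!rootBody.includes("It works!")) {
    problems.push("/ did not return the 'It works!' page");
  }
  // toISOString() output survives a parse/format round trip unchanged.
  const parsed = new Date(timeBody);
  if (Number.isNaN(parsed.getTime()) || parsed.toISOString() !== timeBody) {
    problems.push("/time did not return an ISO-8601 timestamp");
  }
  return problems;
}

// Fetches both endpoints and validates them (requires Node 18+ for fetch).
async function smokeTest(baseUrl) {
  const [rootBody, timeBody] = await Promise.all(
    ["/", "/time"].map(async (path) => (await fetch(new URL(path, baseUrl))).text())
  );
  return validateResponses(rootBody, timeBody);
}
```

Calling smokeTest("http://172.17.0.2:32246").then(console.log) should print an empty array when everything is healthy, and a list of problems otherwise; network failures surface as a rejected promise.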

That's it for today. Remember that this is only the first step, and we barely scratched the surface of what Kubernetes can do for our deployment workflow. I've helped you get started; now it's up to you to continue the journey!

Code Overload

Personal blog of Rafael Ibraim.

Rafael Ibraim has worked as a developer since the early 2000s (before it was cool). He is passionate about creating clean, simple and maintainable code.

He lives a developer's life.